Google’s Crawl Budget – What is it? Should I care?

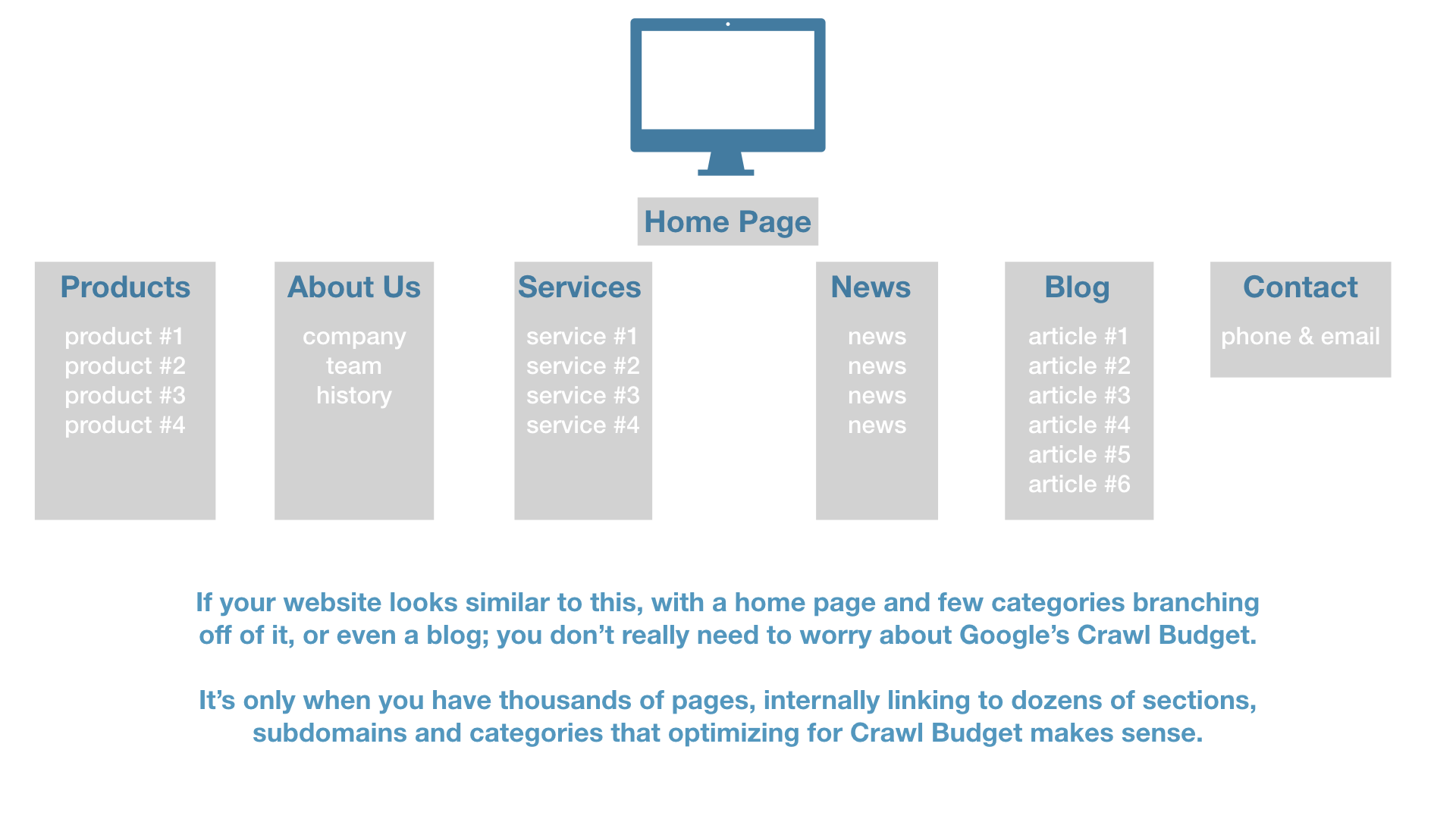

In January 2017, Google published a post on its official webmaster blog clarifying what “crawl budget” is and what it does. We will break that down here so you can understand how it might affect your business. For a website to rank in Google, it must first be crawled so Google can understand what its pages are about. If the website can’t be crawled, it cannot rank at all. Crawl budget is Google’s way of determining which pages of your site to crawl, which pages not to crawl, and how often to come back and re-crawl. For most small businesses, however, this is probably not going to be an issue.

From Google:

“First, we’d like to emphasize that crawl budget…is not something most publishers have to worry about. If new pages tend to be crawled the same day they’re published, crawl budget is not something webmasters need to focus on. Likewise, if a site has fewer than a few thousand URLs, most of the time it will be crawled efficiently.”

Notice how Google says “publishers” and not website owners? In other words, if your website is small and fairly static with little to no new content added to it, you probably don’t have to worry about crawl budget at all. Though as we all know, if you want to compete in organic search you will want to become a publisher, keeping your site updated with compelling content and thought leadership in order to attract potential customers seeking out your services. For more on content marketing, please read here.

For small business owners with limited marketing budgets, creating content on a regular basis is typically not possible, or not viable in certain niche industries overtaken by AdWords and PPC. But for medium to large businesses with the bandwidth to compete in organic search marketing through large websites with valuable content, crawl budget is something that should be on your radar. Read on to learn about Google’s crawl budget, and how Relentless can help you improve it.

How Are Websites Crawled and Ranked by Google?

Google deploys its website crawler called Googlebot across the entire web looking for pages, images and anything else it can find. The way Google crawls a website basically looks like this:

1. Googlebot starts crawling a website and follows the links it finds on it to other websites and then crawls those websites, repeating the process over and over. This is why links are so important to ranking in Google. The more links that point at your website, the more Google thinks you must be important. Google also discovers new pages to crawl through the use of sitemaps that a website owner submits to Google.

2. Google takes all the pages (URLs) it finds for that website and ranks them in order of importance. The order of importance is determined mostly by popularity. URLs that are more popular on the Internet (linked to the most) tend to be crawled more often to keep them fresher in the index.

3. Google sets the “crawl budget” determined by technical limitations and popularity of the pages. If your website responds really quickly for a while, the crawl limit goes up, meaning more connections can be used to crawl. If your website slows down or responds with server errors, the limit goes down and Googlebot crawls less.

4. Finally, Google schedules Googlebot to re-crawl the website, based on crawl budget.
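Google has not published the exact mechanics behind step 3, so the following is only a toy sketch of the idea that fast responses raise the crawl limit while slow responses and server errors lower it. The function name, the thresholds and the connection limits are all our own assumptions, chosen purely for illustration.

```python
def adjust_crawl_limit(current_limit, response_ms, had_server_error,
                       fast_ms=200, slow_ms=2000, min_limit=1, max_limit=10):
    """Return a new (hypothetical) number of parallel crawl connections.

    All thresholds and limits are illustrative assumptions, not Google's values.
    """
    # A server error or a very slow response makes the crawler back off.
    if had_server_error or response_ms >= slow_ms:
        return max(min_limit, current_limit - 1)
    # A quick, healthy response lets the crawler open one more connection.
    if response_ms <= fast_ms:
        return min(max_limit, current_limit + 1)
    # Anything in between leaves the limit unchanged.
    return current_limit


# Simulate four crawl observations: (response time in ms, server error?)
limit = 3
for response_ms, error in [(150, False), (180, False), (2500, False), (300, True)]:
    limit = adjust_crawl_limit(limit, response_ms, error)
    print(f"{response_ms} ms, error={error} -> limit {limit}")
```

In this simulation, two fast responses raise the limit and the slow response and the server error bring it back down again.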

Can I See When Google Crawls My Website?

Yes, you can. The easiest way to do this is to use Google’s Search Console tool. At Relentless, we use Search Console, several third-party tools and server logs to understand when Googlebot visits one of our websites, how many pages it crawls, and whether it comes across any server errors. Essentially, Search Console helps you monitor and maintain your site’s presence in Google Search results.

Once logged in, you can see how often Google has crawled your site, as well as the time it takes to crawl and other indexing stats.

In order to keep Google happy and keep Googlebot coming back to crawl your website, it is important to keep an eye on the server response times here. As mentioned, if your website’s server responds quickly to Googlebot requests and doesn’t return errors, Google will raise the crawl rate limit, opening more connections with less time between Googlebot requests.
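If you have access to your server logs, a short script can give a similar picture to the Search Console report. The sketch below assumes your server writes the common “combined” access log format and that Googlebot identifies itself in the user-agent string. The sample lines, the IP addresses and the function name are made up for illustration.

```python
import re
from collections import Counter

# Matches the "combined" access log format (an assumption about your server).
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot requests per day and collect paths that returned 5xx."""
    hits_per_day = Counter()
    error_paths = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip lines that do not parse or that are not from Googlebot.
        if not match or "Googlebot" not in match.group("agent"):
            continue
        hits_per_day[match.group("day")] += 1
        if match.group("status").startswith("5"):
            error_paths.append(match.group("path"))
    return hits_per_day, error_paths


# Made-up sample lines using documentation-only IP addresses.
sample = [
    '192.0.2.10 - - [22/Aug/2017:10:00:01 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [22/Aug/2017:10:00:05 +0000] "GET /blog/ HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [22/Aug/2017:10:01:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]

hits, errors = summarize_googlebot(sample)
print(dict(hits), errors)
```

Keep in mind that a user-agent string can be spoofed, so a count like this is only a rough indicator unless you also verify that the requests really come from Google.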

Remind Me Why I Should Care About This? What Can I Do?

Remember that in order to compete in organic search marketing, you need a lot of compelling, valuable content first and foremost. But in order for that content to rank, it first has to be crawled by Googlebot. URLs can’t rank unless they are indexed by Google, and they can’t be indexed unless your website can be crawled.

Here are the action items for crawl budget:

1. Make sure pages on your website are discoverable (crawlable) either through links from third-party websites, internal links, or site maps.

2. Make sure your most important pages get the most links, whether external or internal.

3. Make sure your least important pages are not crawled as much. You can block pages with robots.txt if you really want to.

4. Make sure your server is fast, reliable and responsive.

Let’s talk about these in more detail.

Discoverable, Crawlable Webpages and Assets

The best way for Google to discover a page on your website is through third-party links. A link from a third party signals to Google that this page has importance. There are many ways to acquire links for your business and website, but the best way is to create content worthy of being linked to, shared and read.

Other than relying on third-party websites, you can also submit your website and sitemap to Google through the Search Console.
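As a rough illustration of what a sitemap contains, here is a minimal sketch that builds one in the sitemaps.org XML format using Python’s standard library. The URLs and dates are placeholders, and the helper name is our own. Most content management systems can generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build sitemap XML from (location, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages; replace with the real URLs of your site.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2017-08-22"),
    ("https://www.example.com/services/", "2017-08-01"),
])
print(sitemap_xml)
```

The resulting file would typically be saved as sitemap.xml at the root of the site and then submitted in Search Console.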

Your Most Important Pages

Google is looking to ensure the most important pages remain the ‘freshest’ in the index. What are your most important pages? From a technical perspective, the pages on your website that have the most third-party backlinks will be considered most important. Additionally, pages on your website that have the most internal links will also be considered important.

Make sure you are getting third-party links to your most important pages, and make sure that those pages get linked to from within the site as well.
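To check whether your internal linking matches your priorities, you can count how many internal links point at each page. The sketch below is a simplified illustration that works on HTML you have already downloaded. The page contents, the host name and the function names are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def count_internal_inlinks(pages, site_host):
    """Count links from other pages on the same host to each URL."""
    inlinks = Counter()
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            target = urljoin(page_url, href)  # resolve relative links
            # Keep only links within the site, ignoring self-links.
            if urlparse(target).netloc == site_host and target != page_url:
                inlinks[target] += 1
    return inlinks


# Hypothetical three-page site.
pages = {
    "https://www.example.com/":
        '<a href="/services/">Services</a> <a href="/blog/">Blog</a>',
    "https://www.example.com/blog/":
        '<a href="/services/">Services</a> '
        '<a href="https://other.example.org/">Elsewhere</a>',
    "https://www.example.com/services/": '<a href="/">Home</a>',
}
inlinks = count_internal_inlinks(pages, "www.example.com")
for url, count in inlinks.most_common():
    print(count, url)
```

In this example the services page receives the most internal links, which is what you would want if it is the page that matters most to the business.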

What Are My Least Important Pages?

Given what we have learned about crawl budget, we don’t want Googlebot to waste any of its precious time on pages that don’t matter. As such, we want to make sure that Google doesn’t crawl admin pages or pages with little or no content on them.

Google recommends:

“removing low quality pages, merging or improving the content of individual shallow pages into more useful pages”.

A good exercise to do frequently is a content audit of your website. By looking at the pages Google crawls, and also the pages that your visitors spend time on, you can start to see which pages are the most important and which might be the least. If you have a page that nobody seems to care about because it doesn’t have very valuable content, but Google seems to crawl the page often, you might want to edit the page into something more useful, or remove the page or block it from Googlebot.
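If you do decide to block pages with robots.txt, it is worth checking that the rules block what you intend and nothing more. The sketch below uses Python’s standard `urllib.robotparser` module to test a few URLs against a set of rules. The rules and URLs are examples only, not a recommendation for your site.

```python
from urllib.robotparser import RobotFileParser

# Example rules only; your own robots.txt will differ.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, agent="Googlebot"):
    """Return True if the example rules allow this agent to fetch the URL."""
    return parser.can_fetch(agent, url)


for url in [
    "https://www.example.com/services/",
    "https://www.example.com/wp-admin/options.php",
    "https://www.example.com/search/?q=seo",
]:
    print(url, "->", "crawlable" if is_crawlable(url) else "blocked")
```

Note that robots.txt controls crawling rather than indexing, so a blocked URL that other sites link to may still show up in search results.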

Is My Server Fast and Reliable?

At Relentless, we talk a lot about how the speed of your website is important both as customer service and as a ranking signal for SEO. But when it comes to crawl budget, page speed is entirely different from server response time.

“Page speed” refers to page load times and how long a page takes to render for a visitor. Server speed is how long the server takes to respond to the request for the page from things like Googlebot.

Google recommends reducing server response time to less than 200ms. Google says that a server response time of over two seconds can result in “severely limiting the number of URLs Google will crawl from” a site.
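For a quick spot check of server response time, you can time how long a server takes to start answering a request. The sketch below is a rough approximation measured from your own machine, so it also includes network latency, and it is not how Googlebot measures your site. The function names and category labels are our own. The thresholds are the 200ms and two-second figures quoted above.

```python
import time
import urllib.request


def measure_response_ms(url, timeout=10):
    """Time a request until the first byte of the body arrives, in ms."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read(1)  # wait for the first byte of the response body
    return (time.perf_counter() - start) * 1000


def classify_response(ms):
    """Label a response time using the 200 ms and 2 s figures quoted above."""
    if ms < 200:
        return "good"
    if ms <= 2000:
        return "needs attention"
    return "likely to limit crawling"


# Example classifications for three illustrative timings.
for ms in (120, 850, 2600):
    print(ms, "ms ->", classify_response(ms))
```

To test a live page, pass its address to `measure_response_ms` and feed the result to `classify_response`, ideally averaging several runs taken at different times of day.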

In addition to server responses, we want to keep an eye on server errors as well. This too can be done in Google’s Search Console.

From Google:

“Website crawl errors can prevent your page from appearing in search results.”

“If your site shows a 100% error rate in any of the three categories, it likely means that your site is either down or misconfigured in some way. This could be due to a number of possibilities that you can investigate:

– Check that a site reorganization hasn’t changed permissions for a section of your site.

– If your site has been reorganized, check that external links still work.

– Review any new scripts to ensure they are not malfunctioning repeatedly.

– Make sure all directories are present and haven’t been accidentally moved or deleted.”

How Can Relentless Help Me With All Of This?

At Relentless, we focus on large-scale technical SEO projects for websites that take advantage of continual content updates for marketing purposes. As with any dynamic content, additions and changes require maintenance and upkeep to make sure everything is continually optimized for Google.

Typically this would involve:

1. Comprehensive XML Sitemap inclusions to Google Search Console.

2. Content auditing for crawls and caches.

3. Review of server logs to see what pages are being crawled.

4. Internal link auditing, ensuring pages are accessible from internal links as well as the site map.

5. Weekly Google Search Console Error report checks and action items if required.

6. Monthly server optimization to improve server response time.

7. URL redirect and status code auditing.

If you would like more information about how Relentless can help you with your technical SEO, please ask us.

Posted on: 08/22/2017

Posted by: Craig Hauptman – President & Founder
